Automatic Generation of Immersive Audio Based on User Feedback

Participants: Sungyoung Kim, Rai Sato, Minjae Kim, Kangeun Lee

Research Sponsor: National Research Foundation | Duration: 2023 - 2026

Research Summary: This research project focuses on advancing immersive auditory technologies. We aim to collect data and reconstruct spatial audio effects based on biometric feedback that measures immersion. Our spatialization process includes reverb generation to enhance the spatial sound experience and the development of an automated audio panning system. We also evaluate how effectively these sound effects and immersive auditory technologies, designed around user interaction, increase immersion in personal broadcasting and audio-centered content. By understanding how these technologies enhance user engagement and immersion, we aim to improve listener experiences across a range of media environments.

Relevant Publications

- Lee, K., Park, H., & Kim, S. Y. (2024). Research on depth information based object-tracking and stage size estimation for immersive audio panning. The Journal of the Acoustical Society of Korea, 43(5), 529–535.

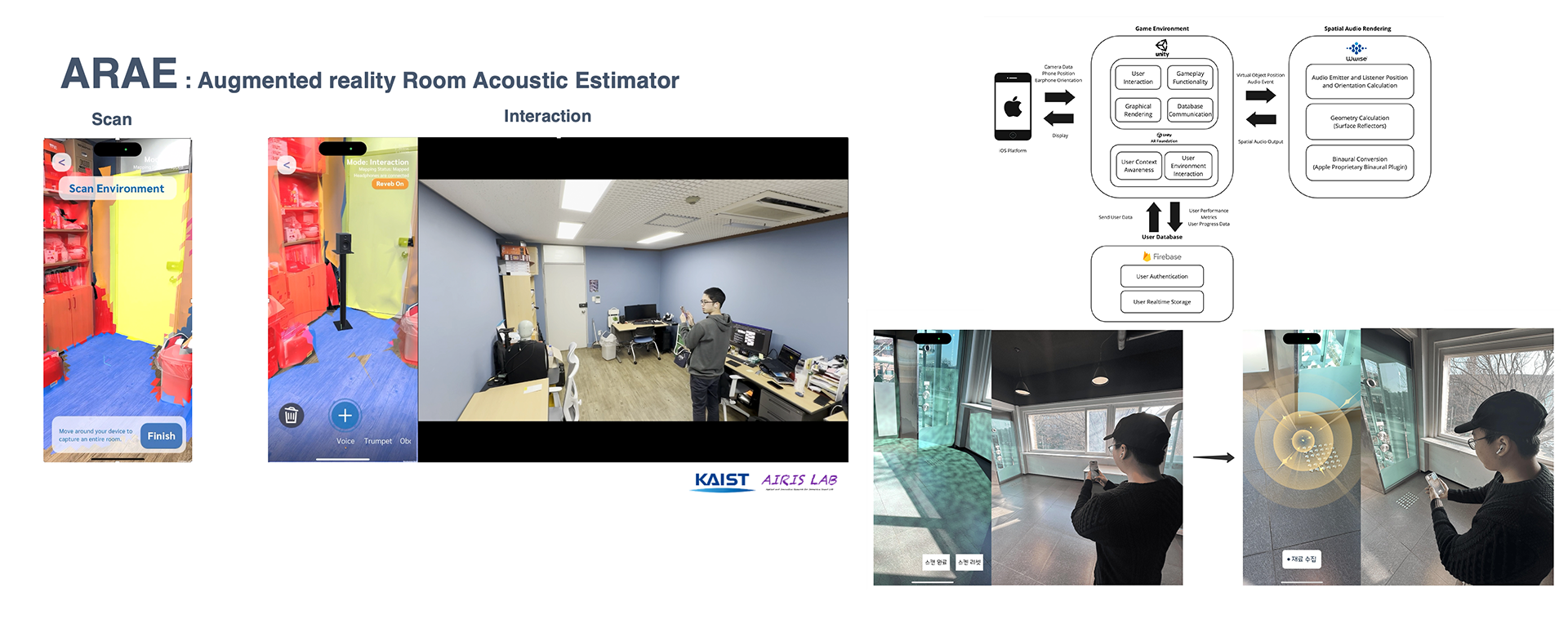

- Sato, R., Izumi, Y., Lee, K., & Kim, S. Y. (2024, April). Automatic acoustical material estimation for room-specific early reflections in auditory augmented reality (AAR) application. In Proceedings of the AES 6th International Conference on Audio for Games. Audio Engineering Society.

- Sato, R., & Kim, S. Y. (2023, October). Subjective evaluation on the sense of “being there” using Augmented Reality Room Acoustic Estimator (ARAE) platform. In Adjunct Proceedings of the 22nd IEEE International Symposium on Mixed and Augmented Reality (ISMAR Adjunct). IEEE.

Augmented Reality-Based Auditory Training and Its Impact on the Quality of Hearing

Participants: Sungyoung Kim, Rai Sato, Pooseung Koh, Kangeun Lee

Research Sponsor: National Research Foundation | Duration: 2024 - 2029

Research Summary: This research initiative aims to develop a gamified, augmented reality (AR)-based auditory selective attention training application that improves speech perception in noise and sound localization, helping to mitigate the effects of hearing loss. The project is a collaboration with Hallym University Sacred Heart Hospital and the University of Iowa. The ultimate goal is an accessible, sustainable, and user-optimized training game that can be integrated into daily life.

Specifically, the project aims to:

- Improve auditory selective attention through interactions with augmented sounds integrated into the user's real living spaces.

- Investigate how enhanced auditory selective attention impacts speech perception in noise and sound localization.

- Develop a personalized training manager system that provides customized content for each user.

- Incorporate multi-user gamification elements to increase the effectiveness of the training.

Relevant Publication

- A New Technical Ear Training Game and Its Effect on Critical Listening Skills

Real-time XR Interface Technology Development for Environmental Adaptation

Participants: Sungyoung Kim, Rai Sato, Minjae Kim, Jongho Lee

Research Sponsor: Institute of Information & Communications Technology Planning & Evaluation | Duration: 2024 - 2026

Research Summary: This research initiative aims to develop a metaverse economic platform that uses XR glasses to integrate real and virtual environments seamlessly, with meta-objects serving to transcend spatial limitations. By generating and managing meta-objects with various inherent attributes, the project provides immersive audiovisual and haptic feedback in response to users' gestures and subtle movements. Our contribution to the project is advancing the implementation of sound and spatial audio within the real-time XR interface.

Quantitative Assessment of Acoustical Attributes and Listener Preferences in Binaural Renderers

Participants: Sungyoung Kim, Rai Sato

Research Sponsor: | Duration: 2022 - 2025

Research Summary: This study investigates the perceptual factors that determine listener preference when evaluating head-tracked binaural audio over headphones. Although numerous software options, or renderers, exist for creating these immersive experiences, they can produce markedly different sound images from the same source material, making it difficult for users to choose the most suitable one. This research is expected to provide objective information on the perceptual profiles of various renderers, helping users select a tool that matches their intentions and preferences. Furthermore, by analyzing the trade-offs among perceptual attributes and how individual listeners weight them, the study aims to offer developers a guide to achieving an "optimal perceptual balance," which can inform future acoustic design and personalization technology.

Relevant Publications

- Sato, R., & Kim, S. Y. (2025). Perceptual factors influencing listener preferences in head-tracked binaural renderers. In Proceedings of the IEEE International Conference on Immersive and 3D Audio (I3DA) (under review).

- Sato, R., Komkris, P., Kim, S. J., Park, Y., & Kim, S. Y. (2024, October). Quantitative assessment of acoustical attributes and listener preferences in binaural renderers with head-tracking function. In Proceedings of the Audio Engineering Society (AES) 157th Convention. Audio Engineering Society.

- Sato, R., Takeuchi, A., Shim, H., & Kim, S. Y. (2023, October). Quantitative assessment of acoustical attributes and listener preferences in binaural renderers. In Proceedings of the Audio Engineering Society (AES) 155th Convention. Audio Engineering Society.

Presentation of Aural Heritage with 6DoF Audio

Participants: Sungyoung Kim, Kyungtaek Oh, Rai Sato, Sungjoon Kim, Pooseung Koh

Research Summary: This project focuses on preserving and revitalizing aural heritage through recording techniques and virtual reality (VR) technologies. Our team captures aural heritage using advanced microphone recording methods, with the aim of recreating six-degrees-of-freedom (6DoF) audio environments. This approach lets users experience aural heritage in VR with a high degree of realism and immersion, moving freely within the virtual environment as if they were physically present at the original locations.

This project not only serves as a vital tool for cultural preservation but also paves the way for future innovations in immersive media. The outcomes of this research have the potential to influence a wide range of applications, from educational programs to interactive entertainment, enhancing the way people connect with and experience aural heritage.

Demo video: https://www.youtube.com/watch?v=2dpa8prWEZg